Week 10 Machine Learning

Large Scale Machine Learning

Before collecting more data, try the learning algorithm with a much smaller sample size and check whether it suffers from a high variance problem when the sample size is small. Only then will having many more samples help.
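One way to run this check is a learning curve: fit on growing subsets and compare training error with validation error. The sketch below is only an illustration under assumptions that are not in these notes: the data is synthetic, the model is a one-variable least-squares line, and the names `fit_line`, `mse`, and `learning_curve` are hypothetical helpers.

```python
import random

def fit_line(points):
    """Least-squares fit of y = a + b*x for a list of (x, y) pairs."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    b = sxy / sxx if sxx else 0.0
    return mean_y - b * mean_x, b

def mse(model, points):
    """Mean squared error of the fitted line on the given points."""
    a, b = model
    return sum((a + b * x - y) ** 2 for x, y in points) / len(points)

def learning_curve(train, val, sizes):
    """Return (m, training error, validation error) for each subset size m."""
    rows = []
    for m in sizes:
        model = fit_line(train[:m])
        rows.append((m, mse(model, train[:m]), mse(model, val)))
    return rows

def make_data(n, rng):
    """Synthetic data (an assumption): y = 2x + 1 plus unit Gaussian noise."""
    xs = [rng.uniform(0, 10) for _ in range(n)]
    return [(x, 2.0 * x + 1.0 + rng.gauss(0, 1)) for x in xs]

rng = random.Random(0)
train, val = make_data(1000, rng), make_data(500, rng)
rows = learning_curve(train, val, sizes=[2, 10, 100, 1000])
for m, train_err, val_err in rows:
    print(m, round(train_err, 3), round(val_err, 3))
```

If validation error is still well above training error at the largest subset and keeps falling as m grows, that points to high variance, and more data is likely to help. If the two errors have already met at a small m, more data probably will not.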

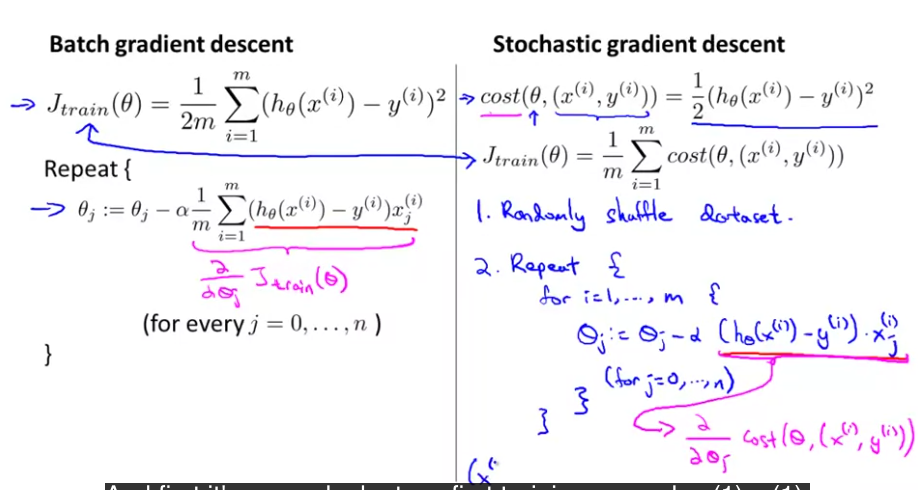

Stochastic Gradient Descent

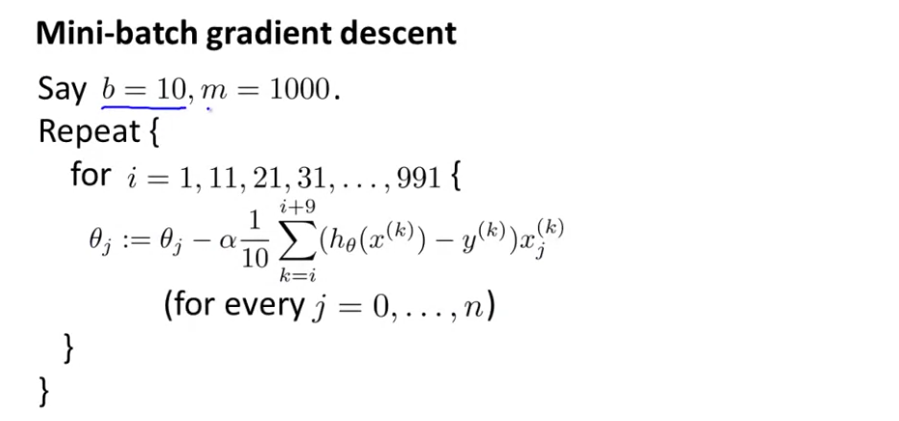
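As a rough companion to the figure, here is a minimal sketch of stochastic gradient descent for linear regression. It assumes a squared-error cost and a linear hypothesis. The function names, the learning rate, and the noise-free toy data are illustrative choices, not taken from these notes.

```python
import random

def predict(theta, x):
    """Linear hypothesis: dot product of parameters and features."""
    return sum(t * xi for t, xi in zip(theta, x))

def sgd(examples, alpha=0.01, epochs=10, seed=0):
    """Update theta after every single example instead of after a full pass."""
    rng = random.Random(seed)
    examples = list(examples)
    theta = [0.0] * len(examples[0][0])
    for _ in range(epochs):
        rng.shuffle(examples)  # visit the examples in random order each pass
        for x, y in examples:
            err = predict(theta, x) - y
            theta = [t - alpha * err * xi for t, xi in zip(theta, x)]
    return theta

# Toy data (an assumption): y = 3 + 2x, with a leading 1.0 as the bias feature.
data = [([1.0, i / 10], 3.0 + 2.0 * (i / 10)) for i in range(50)]
theta = sgd(data, alpha=0.02, epochs=200)
print(theta)
```

Each update looks at one example only, so a single step is cheap even when the training set is very large.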

Mini-batch Gradient Descent
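Mini-batch gradient descent sits between batch and stochastic gradient descent: each update uses a small batch of examples rather than one example or the whole training set. The sketch below assumes linear regression with a squared-error cost. The name `minibatch_gd`, the batch size, and the toy data are illustrative assumptions.

```python
import random

def minibatch_gd(examples, batch_size=10, alpha=0.01, epochs=10, seed=0):
    """Update theta once per batch, using the batch's average gradient."""
    rng = random.Random(seed)
    examples = list(examples)
    n = len(examples[0][0])
    theta = [0.0] * n
    for _ in range(epochs):
        rng.shuffle(examples)
        for start in range(0, len(examples), batch_size):
            batch = examples[start:start + batch_size]
            grad = [0.0] * n
            for x, y in batch:
                err = sum(t * xi for t, xi in zip(theta, x)) - y
                for j in range(n):
                    grad[j] += err * x[j]
            theta = [t - alpha * g / len(batch) for t, g in zip(theta, grad)]
    return theta

# Toy data (an assumption): y = 3 + 2x, with a leading 1.0 as the bias feature.
data = [([1.0, i / 10], 3.0 + 2.0 * (i / 10)) for i in range(50)]
theta = minibatch_gd(data, batch_size=10, alpha=0.05, epochs=600)
print(theta)
```

In practice the inner loop over a batch is usually replaced by a vectorized computation, which is where mini-batches can outperform one-example-at-a-time updates.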

Notes on stochastic and mini-batch gradient descent

- Plot the cost averaged over the last 1000 iterations, or a similar number.

- If the curve is steadily decreasing, the algorithm is converging. If not, adjust the relevant parameters, such as the learning rate.

- You can use a dynamic learning rate, where the learning rate becomes smaller and smaller as the number of iterations increases.
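The monitoring and learning-rate ideas above can be sketched together. This is a minimal illustration, not a definitive recipe: it assumes linear regression with a squared-error cost and a decay schedule of the form `const1 / (iteration + const2)`. The constants, the function name, and the synthetic data are all assumptions.

```python
import random

def sgd_with_monitoring(examples, const1=1.0, const2=50.0,
                        epochs=5, window=1000, seed=0):
    """SGD that records the cost averaged over each window of iterations."""
    rng = random.Random(seed)
    examples = list(examples)
    theta = [0.0] * len(examples[0][0])
    averaged_costs, running, step = [], 0.0, 0
    for _ in range(epochs):
        rng.shuffle(examples)
        for x, y in examples:
            step += 1
            alpha = const1 / (step + const2)  # shrinks as iterations grow
            err = sum(t * xi for t, xi in zip(theta, x)) - y
            running += 0.5 * err * err        # cost measured before the update
            theta = [t - alpha * err * xi for t, xi in zip(theta, x)]
            if step % window == 0:
                averaged_costs.append(running / window)
                running = 0.0
    return theta, averaged_costs

# Synthetic data (an assumption): y = 3 + 2x plus a little Gaussian noise.
rng = random.Random(1)
data = []
for _ in range(2000):
    x = rng.random()
    data.append(([1.0, x], 3.0 + 2.0 * x + rng.gauss(0, 0.1)))

theta, costs = sgd_with_monitoring(data)
for i, c in enumerate(costs, start=1):
    print(i * 1000, round(c, 4))
```

Plotting `costs` against the iteration count gives the curve described above. A larger window gives a smoother curve but delays feedback.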

Online Learning

- Used when new data keeps coming in, as a stream of data instead of a fixed training set.

- Similar to stochastic gradient descent, except that the training set is not fixed.
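A minimal sketch of online learning, assuming a logistic regression model that is updated once per incoming example and then discards it. The class `OnlineLogisticRegression`, the generator `example_stream`, and the single-feature synthetic stream are hypothetical and only stand in for a real data source.

```python
import math
import random

class OnlineLogisticRegression:
    """Logistic regression updated one example at a time."""

    def __init__(self, n_features, alpha=0.1):
        self.theta = [0.0] * n_features
        self.alpha = alpha

    def predict_proba(self, x):
        z = sum(t * xi for t, xi in zip(self.theta, x))
        z = max(-30.0, min(30.0, z))  # clamp to keep exp() well behaved
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, x, y):
        """One stochastic gradient step on a single (x, y) pair."""
        err = self.predict_proba(x) - y
        self.theta = [t - self.alpha * err * xi
                      for t, xi in zip(self.theta, x)]

def example_stream(n, seed=0):
    """Stand-in for live data: label is 1 when the feature is positive."""
    rng = random.Random(seed)
    for _ in range(n):
        s = rng.uniform(-1.0, 1.0)
        yield [1.0, s], (1 if s > 0 else 0)

model = OnlineLogisticRegression(n_features=2)
for x, y in example_stream(5000):
    model.update(x, y)  # learn from the example, then let it go

print(model.predict_proba([1.0, 0.8]), model.predict_proba([1.0, -0.8]))
```

No example is stored, so the model can keep adapting if the underlying behavior drifts over time.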

Map Reduce

- Split the data and run steps of the algorithm on separate machines.

- A centralized server combines the results.
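The split-and-combine pattern can be sketched for one batch gradient computation. In this illustration, threads from the standard library stand in for separate machines, which is an assumption made to keep the example self-contained. The names `partial_gradient`, `split`, and `mapreduce_gradient` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def partial_gradient(theta, chunk):
    """Map step: sum of per-example gradients over one chunk of data."""
    grad = [0.0] * len(theta)
    for x, y in chunk:
        err = sum(t * xi for t, xi in zip(theta, x)) - y
        for j, xj in enumerate(x):
            grad[j] += err * xj
    return grad

def split(data, parts):
    """Cut the data into at most `parts` contiguous chunks."""
    size = (len(data) + parts - 1) // parts
    return [data[i:i + size] for i in range(0, len(data), size)]

def mapreduce_gradient(theta, data, workers=4):
    chunks = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(lambda c: partial_gradient(theta, c), chunks))
    # Reduce step: the central node adds the partial sums and averages them.
    total = [sum(column) for column in zip(*partials)]
    return [g / len(data) for g in total]

data = [([1.0, float(i)], 2.0 * i) for i in range(400)]
theta = [0.0, 0.0]
distributed = mapreduce_gradient(theta, data)
single = [g / len(data) for g in partial_gradient(theta, data)]
print(distributed, single)
```

This works because the gradient is a sum over examples, so partial sums computed independently add up to the same total as a single pass over all the data.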